February 22, 2025 | Menlo Park, California

Meta issued an apology on Thursday after an “error” caused Instagram users to see an influx of violent and graphic content in their Reels feeds. The company confirmed that the issue had been resolved and assured users that it remained committed to content moderation.

“We have fixed an error that caused some users to see content in their Instagram Reels feed that should not have been recommended. We apologize for the mistake,” a Meta spokesperson said.

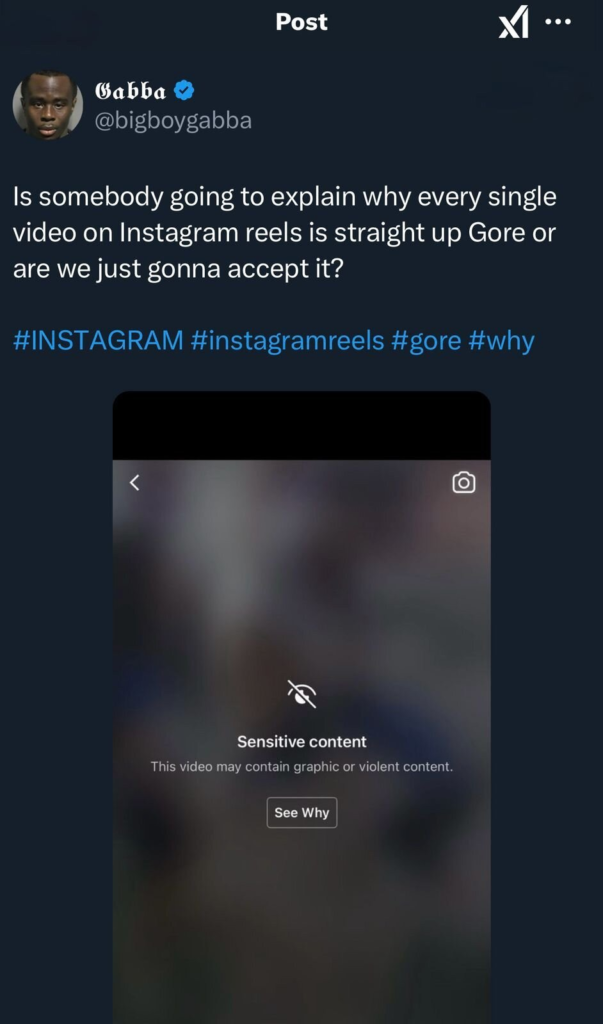

Users took to various social media platforms to complain that explicit videos appeared in their feeds even after they had enabled the strictest level of Instagram’s “Sensitive Content Control” setting. Some reported being repeatedly recommended disturbing clips depicting violence, killings, and cartel-related content.

Meta’s policies typically prohibit content depicting extreme violence, such as dismemberment, visible innards, or charred bodies. The company does allow some graphic content when it serves to raise awareness, but it applies warning labels to such content and restricts underage users from viewing it.

The glitch came shortly after Meta announced changes to its content moderation policies aimed at reducing censorship. In January, the company said it would refocus its automated systems away from scanning for all policy violations and toward illegal and high-severity cases, such as terrorism, child exploitation, and fraud. Enforcement against less severe violations would instead rely on user reports.

CEO Mark Zuckerberg also announced plans to allow more political content and to replace the company’s third-party fact-checking program in the U.S. with a “Community Notes” model similar to the one used by Elon Musk’s platform, X. The changes have been widely viewed as an effort to repair Meta’s relationship with President Donald Trump, who has criticized its content moderation policies.

Critics argue that Meta’s evolving approach raises concerns about the effectiveness of its content moderation, particularly after the company laid off 21,000 employees in 2022 and 2023, including members of its civic integrity and safety teams.

Meta has faced repeated scrutiny over its moderation policies, from its handling of misinformation and illicit activities to its failure to curb content that fueled violence in conflict zones such as Myanmar and Ethiopia. The latest incident has reignited questions about whether Meta’s shift away from aggressive content policing could lead to more harmful material appearing on its platforms.

Following the backlash, Meta repeated its apology and reiterated its commitment to user safety, assuring users that it would continue refining its moderation systems to prevent similar incidents.